Beyond Traditional AI: Embracing Multimodal Challenges with Meta-Transformer

Introduction

In the realm of pharmaceutical research, the quest to unlock groundbreaking insights often requires navigating through a vast sea of diverse data modalities. Imagine a powerful tool that can seamlessly process and integrate information from text, images, audio, 3D point clouds, video, graphs, and more, transcending the limitations of conventional AI approaches. Enter “Meta-Transformer: A Unified Framework for Multimodal Learning” – a cutting-edge solution that holds the promise to revolutionize your data analysis, empowering you to extract meaningful knowledge and accelerate discoveries from the rich tapestry of pharmaceutical data. In this blog post, we dive into the technical intricacies of Meta-Transformer – Meta-Transformer: A Unified Framework for Multimodal Learning by Zhang et al. –, revealing how this transformative model could be the key to unlocking new frontiers in pharmaceutical research.

The Allure of Transformers

In 2017, the introduction of the transformer architecture by Vaswani et al. revolutionized natural language processing. The attention mechanisms in transformers proved incredibly adept at capturing long-range dependencies, leading to improvements in various tasks. Subsequently, researchers explored the potential of transformers in image recognition, audio processing, and more, igniting the spark of curiosity: could transformers pave the way for a foundation model capable of unifying multiple modalities?

Enter Meta-Transformer: Unifying 12 Modalities

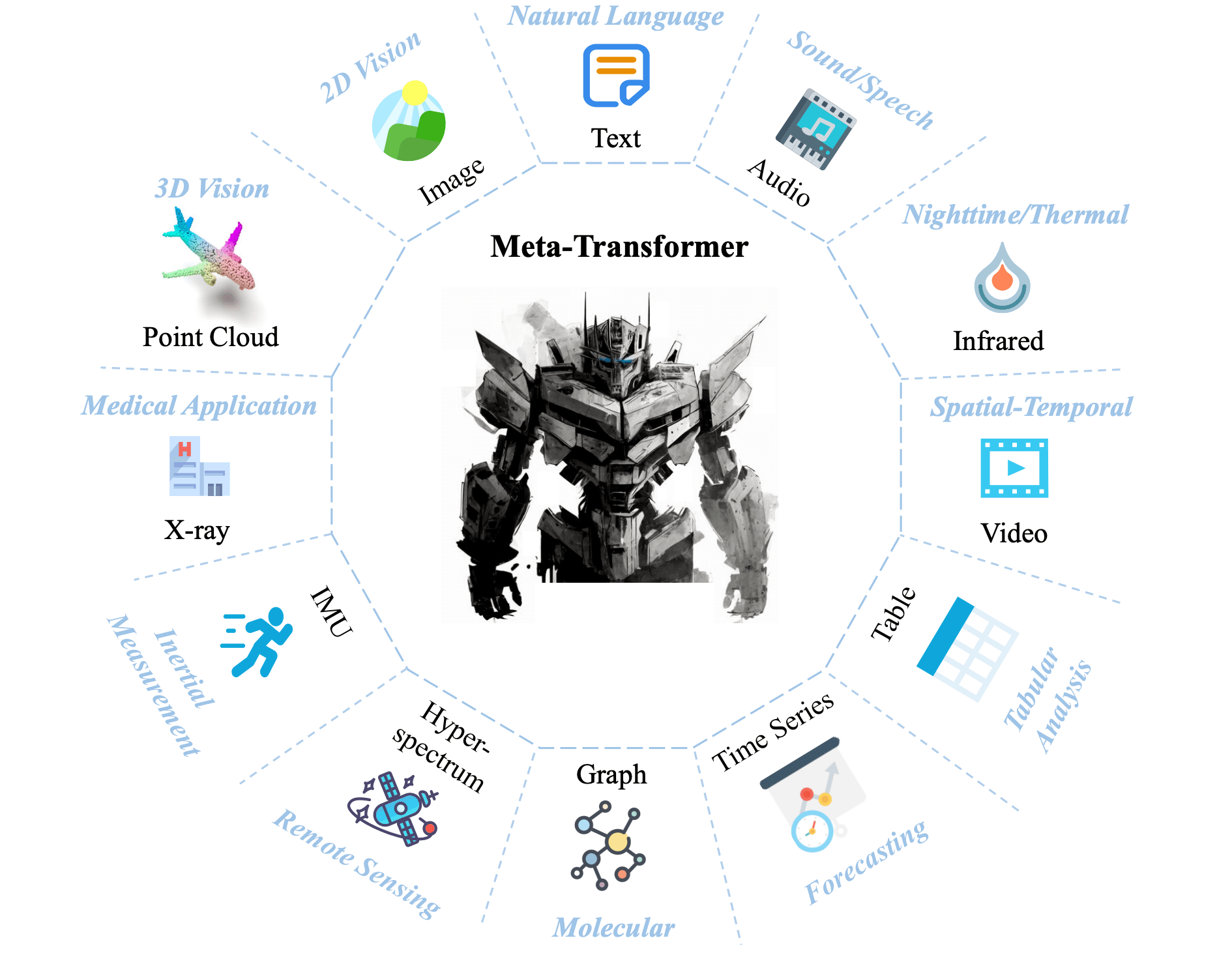

The paper’s authors propose Meta-Transformer, an ambitious and versatile framework that harnesses the power of transformers to process not just a handful, but a remarkable 12 different modalities. These include natural language, images, point clouds, audio, videos, infrared data, hyperspectral data, X-rays, IMU data, tabular data, graphs, and time-series data.

Figure 1: Many data streams can inform AI models. Meta-Transformer provides a single solution to aggregate over many different modes of inputs – yielding state of the art performance on important benchmarks. Image taken from https://kxgong.github.io/meta_transformer/

Meta-Transformer Components

Unified Data Tokenizer: Meta-Transformer starts by transforming data from various modalities into token sequences that share a common embedding space. This pivotal step standardizes the representation of diverse data, effectively bridging the modality gap.

Modality-Shared Encoder: After tokenization, a modality-shared encoder with frozen parameters extracts high-level semantic features from the input data. This encoder remains consistent across all modalities, fostering a unified approach to multimodal learning.

Task-Specific Heads: Meta-Transformer incorporates task-specific heads for downstream applications. These heads update only the relevant parameters for the specific task, while the modality-shared encoder remains unchanged. This strategy ensures efficient learning of task-specific representations without affecting the shared encoder’s effectiveness.

Meta-Transfomer Performance

The Meta-Transformer shows promising performance across various experiments in different modalities:

Text Understanding: On the GLUE benchmark, the Meta-Transformer achieves competitive results, demonstrating its potential for natural language understanding tasks.

Image Understanding: In image classification, object detection, and semantic segmentation tasks, the Meta-Transformer performs well compared to state-of-the-art methods like Swin Transformer and InternImage, especially when fine-tuned with a limited number of parameters.

Infrared, Hyperspectral, and X-Ray Data Understanding: The Meta-Transformer exhibits competitive results in recognizing infrared, hyperspectral, and X-ray data, showing its adaptability to different types of image recognition tasks.

Point Cloud Understanding: In 3D object classification and segmentation tasks, the Meta-Transformer delivers excellent performance compared to other state-of-the-art methods while using significantly fewer trainable parameters.

Audio Recognition: The Meta-Transformer performs competitively in audio recognition, achieving high accuracy scores with fewer trainable parameters compared to existing audio transformer models.

Video Recognition: Although the Meta-Transformer’s performance in video understanding is not as high as some specialized video-tailored methods, it stands out for its significantly reduced number of trainable parameters.

Time-Series Forecasting: The Meta-Transformer demonstrates competitive performance in time-series forecasting on various benchmark datasets with a significantly reduced number of trainable parameters compared to existing methods.

Tabular Data Understanding: The Meta-Transformer shows promise in tabular data understanding, outperforming some existing methods on complex datasets.

Graph Understanding: In graph data understanding, the Meta-Transformer achieves competitive results with a much smaller number of trainable parameters compared to other graph neural network models.

Overall, the Meta-Transformer demonstrates versatility and effectiveness across a wide range of experiments, highlighting its potential for multimodal perception and transfer learning in various domains. Additionally, its ability to perform well with a reduced number of trainable parameters makes it an efficient and practical approach for various tasks.

Conclusion: Forging a Path to the Future

The advent of “Meta-Transformer: A Unified Framework for Multimodal Learning” marks a significant milestone in the field of artificial intelligence and multimodal learning. By addressing the modality gap and devising a cohesive approach to processing 12 different modalities, Meta-Transformer pioneers a new era of unified and comprehensive intelligence systems. The promise of this novel framework extends to pharmaceutical research, where the ability to seamlessly integrate information from various sources will lead to transformative advancements. ZONTAL is providing a framework for multi-modal data storage, harmonization, and advanced analytics that can leverage advanced models like the Meta-Transformer with its life science analytics platform. Want to make better decisions using best of breed data science tools and create the lab of the future? Contact us today!

>

>